Canary deployments in Kubernetes have proven valuable in my development workflows, especially when introducing new features to a broad user base without causing downtime or disrupting the user experience. A canary instance receives a gradually increasing share of the load (e.g., 5%, 25%, 50%, 100%), so its production stability is tested at each step.

Releasing an update to a targeted or sample group of users first lets you identify issues quickly and collect feedback to fine-tune the deployment. The resulting real-time data on user behavior and preferences also provides useful insights for future development efforts.
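One way to pick a stable sample group is to hash each user ID into a bucket and send the lowest buckets to the canary. The following is a minimal sketch of that idea, not a description of any particular tool; the function names and the `canary-v2` salt are hypothetical and chosen only for illustration.

```python
import hashlib


def canary_bucket(user_id: str, salt: str = "canary-v2") -> int:
    """Map a user ID to a stable bucket in the range 0..99."""
    digest = hashlib.sha256(f"{salt}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % 100


def routes_to_canary(user_id: str, canary_percent: int, salt: str = "canary-v2") -> bool:
    """Return True when the user falls inside the canary sample."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    return canary_bucket(user_id, salt) < canary_percent


if __name__ == "__main__":
    users = [f"user-{i}" for i in range(10_000)]
    for percent in (5, 25, 50, 100):
        share = sum(routes_to_canary(u, percent) for u in users) / len(users)
        print(f"target {percent:3d}% -> observed {share:.1%}")
```

Because the bucket depends only on the user ID and the salt, a user who is in the canary group at 5% stays in it at 25% and beyond, which keeps each user's experience consistent as the rollout widens.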

Why Choose Canary Deployments in Kubernetes

Canary deployments within a Kubernetes environment help mitigate potential issues when updating your applications. In addition to the benefits mentioned earlier, Kubernetes provides a production-like environment for real-world testing and quality assurance, so problems are detected before they reach a wider audience.

With zero-downtime updates and automated rollback capabilities, applications remain available and reliable during deployment, delivering a seamless user experience. Moreover, a canary runs with a minimal number of replicas during testing, which keeps resource usage low. This aligns with modern DevOps practices and improves the reliability and stability of application updates within Kubernetes environments.

How Canary Deployment Works in Kubernetes

Let’s break down how canary deployments function within Kubernetes step by step:

1. Initial Setup

Set up a Kubernetes cluster running the existing version of your application.

Prepare the new version of your application.

2. Create Canary Deployment

Create a separate Kubernetes Deployment for the updated application version. This Deployment is the canary.
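As a rough illustration, a canary Deployment can be derived from the stable manifest by changing the name, replica count, image, and a distinguishing label. The sketch below builds the manifest as a plain Python dictionary. The `web` application, the `example.com/web` images, and the `track` label values are assumptions made for this example, not details from a real cluster.

```python
import copy
import json


def make_canary_manifest(stable: dict, new_image: str, replicas: int = 1) -> dict:
    """Derive a canary Deployment manifest from the stable one."""
    canary = copy.deepcopy(stable)  # never mutate the stable manifest
    canary["metadata"]["name"] = stable["metadata"]["name"] + "-canary"
    canary["spec"]["replicas"] = replicas
    # Mark every label set with track=canary so the two Deployments
    # select disjoint sets of pods.
    for labels in (
        canary["metadata"].setdefault("labels", {}),
        canary["spec"]["selector"]["matchLabels"],
        canary["spec"]["template"]["metadata"]["labels"],
    ):
        labels["track"] = "canary"
    # Assumes the application container is the first one in the pod spec.
    canary["spec"]["template"]["spec"]["containers"][0]["image"] = new_image
    return canary


# Hypothetical stable Deployment used only for illustration.
stable_deployment = {
    "apiVersion": "apps/v1",
    "kind": "Deployment",
    "metadata": {"name": "web", "labels": {"app": "web", "track": "stable"}},
    "spec": {
        "replicas": 9,
        "selector": {"matchLabels": {"app": "web", "track": "stable"}},
        "template": {
            "metadata": {"labels": {"app": "web", "track": "stable"}},
            "spec": {"containers": [{"name": "web", "image": "example.com/web:1.0.0"}]},
        },
    },
}

if __name__ == "__main__":
    canary = make_canary_manifest(stable_deployment, "example.com/web:1.1.0")
    print(json.dumps(canary, indent=2))
```

The printed JSON could be saved to a file and applied with `kubectl apply -f`. A Service that selects only `app: web` would then match pods from both Deployments.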

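3. Traffic Splitting

Route a small share of traffic (for example, 5%) to the canary while the stable version continues to serve the rest.

4. Observation and Monitoring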

Monitor the new version’s performance as traffic shifts.

Use metrics, logs, and observability tools for performance assessment.
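To make "performance assessment" concrete, the sketch below reduces a batch of request samples to two commonly watched numbers: the error rate and the 95th-percentile latency. It assumes the samples have already been collected by whatever observability tool you use; `RequestSample` and the function names are hypothetical, and counting only HTTP 5xx responses as errors is a choice made for this example.

```python
import math
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class RequestSample:
    status_code: int
    latency_ms: float


def error_rate(samples: List[RequestSample]) -> float:
    """Fraction of requests that returned an HTTP 5xx status."""
    if not samples:
        return 0.0
    errors = sum(1 for s in samples if s.status_code >= 500)
    return errors / len(samples)


def percentile(values: List[float], pct: int) -> float:
    """Nearest-rank percentile of a non-empty list (pct in 1..100)."""
    if not values:
        raise ValueError("values must not be empty")
    if not 1 <= pct <= 100:
        raise ValueError("pct must be between 1 and 100")
    ordered = sorted(values)
    rank = math.ceil(pct * len(ordered) / 100)
    return ordered[rank - 1]


def summarize(samples: List[RequestSample]) -> Dict[str, float]:
    """Reduce raw samples to the numbers a canary check would look at."""
    return {
        "requests": len(samples),
        "error_rate": error_rate(samples),
        "p95_latency_ms": percentile([s.latency_ms for s in samples], 95),
    }


if __name__ == "__main__":
    demo = [RequestSample(200, 20.0 + i) for i in range(98)]
    demo += [RequestSample(503, 900.0), RequestSample(500, 1200.0)]
    print(summarize(demo))
```

Running the same summary over the stable and canary pods separately gives two sets of numbers that can be compared side by side.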

5. Manual/Automated Rollback

Set up automated rollback in Kubernetes so that all traffic switches back to the old, stable version if issues arise. Alternatively, have a documented process and the necessary information in place for a manual rollback.
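An automated rollback needs a rule for when to give up on the canary. One simple rule, sketched below, is to roll back after a fixed number of consecutive failed health checks, so that a single transient failure does not abort the rollout. `RollbackGuard` and `traffic_after_check` are hypothetical names, and the threshold of three is an arbitrary default.

```python
from typing import Tuple


class RollbackGuard:
    """Trips after N consecutive failed health checks and stays tripped."""

    def __init__(self, max_consecutive_failures: int = 3) -> None:
        if max_consecutive_failures < 1:
            raise ValueError("max_consecutive_failures must be at least 1")
        self.max_consecutive_failures = max_consecutive_failures
        self.consecutive_failures = 0
        self.tripped = False

    def record(self, healthy: bool) -> bool:
        """Record one health check; return True when a rollback is due."""
        if self.tripped:
            return True
        if healthy:
            self.consecutive_failures = 0  # a healthy check resets the streak
        else:
            self.consecutive_failures += 1
            if self.consecutive_failures >= self.max_consecutive_failures:
                self.tripped = True
        return self.tripped


def traffic_after_check(guard: RollbackGuard, healthy: bool, canary_percent: int) -> Tuple[int, int]:
    """Return (stable_percent, canary_percent) after one health check."""
    if guard.record(healthy):
        return 100, 0  # rollback: all traffic returns to the stable version
    return 100 - canary_percent, canary_percent


if __name__ == "__main__":
    guard = RollbackGuard(max_consecutive_failures=2)
    for healthy in (True, False, True, False, False, True):
        print(healthy, traffic_after_check(guard, healthy, 25))
```

Once the guard has tripped it stays tripped, so a later healthy check does not silently send traffic back to a canary that has already been judged unstable.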

6. Gradual Scaling

If the canary deployment performs well, incrementally increase traffic on the new version.

Continue monitoring at each step.
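Without a service mesh or a weighted ingress, one common approximation is to put both Deployments behind a single Service and control the traffic share through the ratio of pod replicas. The sketch below computes replica counts for a target percentage under that assumption. `replica_split` is a hypothetical helper, and the resulting share is only approximate because it depends on how requests are spread across pods.

```python
from typing import Tuple


def replica_split(total_replicas: int, canary_percent: int) -> Tuple[int, int]:
    """Return (stable_replicas, canary_replicas) for a target canary share."""
    if total_replicas < 2:
        raise ValueError("need at least 2 replicas to split traffic")
    if not 0 <= canary_percent <= 100:
        raise ValueError("canary_percent must be between 0 and 100")
    if canary_percent == 0:
        return total_replicas, 0
    if canary_percent == 100:
        return 0, total_replicas
    # Round half up using integer arithmetic.
    canary = (total_replicas * canary_percent + 50) // 100
    # For intermediate steps keep at least one pod on each side.
    canary = min(max(canary, 1), total_replicas - 1)
    return total_replicas - canary, canary


if __name__ == "__main__":
    for percent in (5, 25, 50, 100):
        stable, canary = replica_split(20, percent)
        print(f"{percent:3d}% -> stable={stable}, canary={canary}")
```

With few replicas the achievable steps are coarse: with 10 pods, for instance, the smallest possible canary share is one pod in ten. Finer control generally requires traffic weighting at the ingress or mesh layer.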

7. Full Rollout

Shift 100% of traffic to the new version once you are confident it is stable.

8. Clean-up

After a successful rollout of the new version, remove the old deployment resources that are no longer needed.

Challenges and Considerations for Canary Deployments in Kubernetes

While canary deployments in Kubernetes offer numerous advantages, they do present some challenges and considerations:

Complexity

Setting up and managing canary deployments in Kubernetes can be complex, especially for teams new to Kubernetes. It requires a deep understanding of Kubernetes concepts and tools.

Tooling

Kubernetes lacks built-in support for canary analysis, which involves comparing metrics and determining the deployment’s success. This means that additional tools or custom scripts need to be integrated into the deployment process to collect and analyze metrics.
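A custom canary analysis script can be as small as a comparison of canary metrics against the stable baseline with explicit tolerances. The following sketch shows that comparison only; it assumes the metrics have been fetched elsewhere. The `Metrics` type, the `analyze_canary` function, and both threshold defaults are hypothetical values picked for illustration, not recommendations.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Metrics:
    error_rate: float        # fraction of failed requests, 0.0..1.0
    p95_latency_ms: float    # 95th-percentile latency in milliseconds


def analyze_canary(
    baseline: Metrics,
    canary: Metrics,
    max_error_rate_increase: float = 0.01,
    max_latency_ratio: float = 1.2,
) -> Tuple[bool, List[str]]:
    """Compare canary metrics with the baseline; return (passed, reasons)."""
    reasons: List[str] = []
    if canary.error_rate > baseline.error_rate + max_error_rate_increase:
        reasons.append(
            f"error rate {canary.error_rate:.3f} exceeds baseline "
            f"{baseline.error_rate:.3f} by more than {max_error_rate_increase:.3f}"
        )
    if canary.p95_latency_ms > baseline.p95_latency_ms * max_latency_ratio:
        reasons.append(
            f"p95 latency {canary.p95_latency_ms:.0f} ms exceeds "
            f"{max_latency_ratio:.1f}x baseline {baseline.p95_latency_ms:.0f} ms"
        )
    return (not reasons, reasons)


if __name__ == "__main__":
    baseline = Metrics(error_rate=0.010, p95_latency_ms=200.0)
    for candidate in (Metrics(0.015, 210.0), Metrics(0.050, 400.0)):
        passed, reasons = analyze_canary(baseline, candidate)
        print("PASS" if passed else "FAIL", reasons)
```

Returning the reasons alongside the verdict makes the result easier to log and review when a canary is rejected.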

Monitoring and Metrics

Collecting and analyzing metrics to evaluate the success of a canary deployment can be challenging. Integrating external tools or custom scripts may be necessary to gather the required data.

Rollback Strategy

Having a well-defined rollback strategy is crucial in case issues arise during the canary deployment. This ensures that you can quickly revert to the stable version to minimize user impact.

When considering canary deployments in Kubernetes, it’s essential to carefully evaluate these challenges and plan accordingly to ensure a successful deployment.

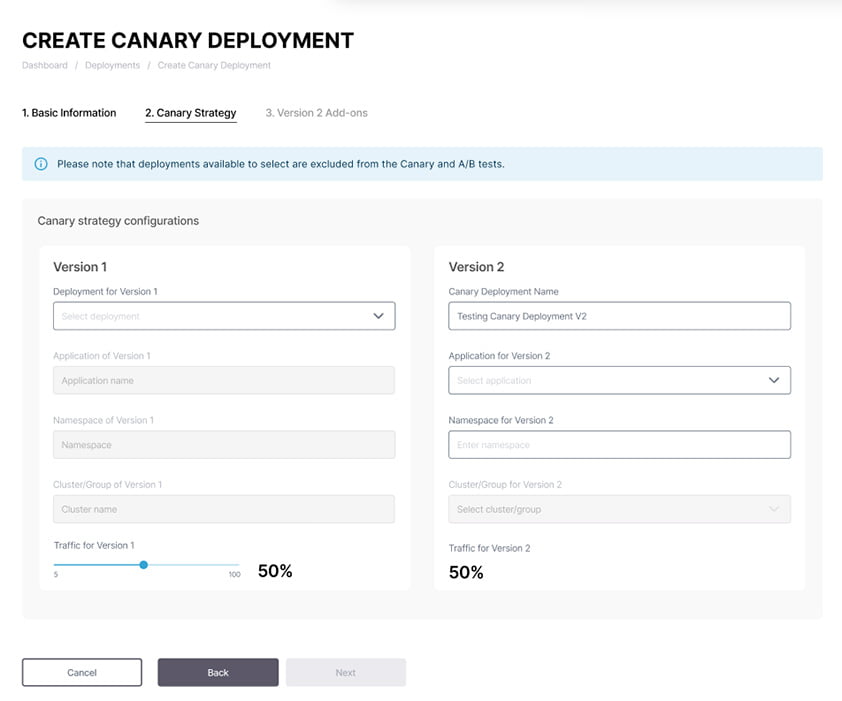

Canary Deployment with CAEPE

Simplifying deployment, as I’ve mentioned in other articles, is a core theme in CAEPE™. CAEPE’s user-friendly interface makes implementing a canary deployment simple.

Conclusion

Canary deployments in Kubernetes offer developers a powerful strategy for introducing new features and updates without causing disruptions to the user experience. By progressively testing updates with a subset of users and monitoring performance, teams can quickly catch and address issues. Kubernetes enhances this process by providing a production-like environment with zero-downtime updates and automated rollback capabilities.

However, it’s crucial to be aware of the complexities involved, such as tooling requirements, monitoring challenges, and the need for a solid rollback strategy. Developers looking to optimize their deployment processes should consider Canary Deployments in Kubernetes as a valuable tool in their arsenal, and solutions like CAEPE can make the process even more straightforward.
