Having worked with Kubernetes for a while, I’ve observed recurring challenges that DevOps teams encounter when initiating application deployments in Kubernetes environments.

Ensuring secure and consistent Kubernetes application deployment can often feel like trying to herd caffeinated kittens on a trampoline. While the comparison may be slightly exaggerated, deploying applications in a reliable and secure manner while maintaining a stable environment within Kubernetes is not simple.

In this article, I will explore common challenges and provide practical approaches for effectively managing them. Whether you are new to Kubernetes or seeking to enhance your existing knowledge, hopefully these insights will assist in expediting your journey.

When it comes to application deployment in Kubernetes, the areas DevOps teams need to watch out for and plan for include:

Role-based Access Controls (RBAC) in Application Deployments

Role-based Access Controls (RBAC) for Kubernetes manage user permissions, ensuring only authorized actions can be performed within the Kubernetes cluster.

However, native Kubernetes does not provide a user interface for RBAC. Roles and bindings are managed from the command line.

Impact? If you are scaling the usage of Kubernetes, this will be finicky to manage. As the number of users grows, it is impractical to go back and forth to the terminal to check roles and users.

Solution: From the outset, plan for a single control plane to easily manage RBAC in terms of service accounts, users, roles, cluster roles and bindings. This can be done through the integration of third-party tools.
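To make the moving parts concrete, here is a minimal Python sketch that builds a namespaced Role and a matching RoleBinding as plain dictionaries and prints them as JSON, which `kubectl apply -f` accepts alongside YAML. The role name `app-deployer`, the namespace `staging` and the user `jane` are hypothetical, and the helper functions are illustrative rather than part of any Kubernetes library.

```python
import json

RBAC_API_GROUP = "rbac.authorization.k8s.io"


def deployer_role(namespace: str, name: str = "app-deployer") -> dict:
    """Role that allows managing Deployments in a single namespace."""
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "Role",
        "metadata": {"name": name, "namespace": namespace},
        "rules": [
            {
                "apiGroups": ["apps"],
                "resources": ["deployments"],
                "verbs": ["get", "list", "watch", "create", "update", "patch"],
            }
        ],
    }


def bind_role_to_user(role: dict, user: str) -> dict:
    """RoleBinding that grants the given Role to one user in the Role's namespace."""
    meta = role["metadata"]
    return {
        "apiVersion": f"{RBAC_API_GROUP}/v1",
        "kind": "RoleBinding",
        "metadata": {"name": f"{meta['name']}-{user}", "namespace": meta["namespace"]},
        "subjects": [{"kind": "User", "name": user, "apiGroup": RBAC_API_GROUP}],
        "roleRef": {"kind": "Role", "name": meta["name"], "apiGroup": RBAC_API_GROUP},
    }


if __name__ == "__main__":
    role = deployer_role("staging")      # hypothetical namespace
    binding = bind_role_to_user(role, "jane")  # hypothetical user
    # Wrap both objects in a List so they can be applied from one file.
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [role, binding]}, indent=2))
```

Once applied, a check such as `kubectl auth can-i create deployments --as jane -n staging` shows whether the binding behaves as intended. Repeating that check for every user and role is exactly the terminal back-and-forth that becomes impractical at scale.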

Node Isolation

The second common challenge is node isolation. Node isolation in Kubernetes involves separating cluster nodes based on specific criteria, improving security, compliance, and resource allocation.

When implementing node isolation, think carefully about your application-specific requirements and your security compliance obligations.
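One common way to express node isolation is to label and taint a group of nodes, then give only the intended workloads a matching node selector and toleration. The Python sketch below builds such a Pod manifest as a dictionary. The label key `dedicated`, the pool name `payments` and the image name are assumptions for illustration, and the nodes would need to be labelled and tainted separately (for example with `kubectl label nodes` and `kubectl taint nodes`).

```python
import json

# Hypothetical label/taint key shared by the dedicated nodes.
POOL_KEY = "dedicated"


def isolated_pod(name: str, image: str, pool: str) -> dict:
    """Pod that may only run on nodes labelled and tainted for `pool`."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            # nodeSelector restricts scheduling to nodes carrying the label.
            "nodeSelector": {POOL_KEY: pool},
            # The toleration lets this pod onto nodes whose taint repels others.
            "tolerations": [
                {
                    "key": POOL_KEY,
                    "operator": "Equal",
                    "value": pool,
                    "effect": "NoSchedule",
                }
            ],
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    # Hypothetical workload and image name.
    print(json.dumps(isolated_pod("ledger", "example/ledger:1.0", "payments"), indent=2))
```

The two settings do different jobs: the taint keeps other workloads off the dedicated nodes, while the node selector keeps this workload off everything else. Using only one of them gives partial isolation.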

Network Traffic Management

Network traffic is another area that is challenging to manage out of the box in Kubernetes. Compliance typically requires full control over your network so that you can monitor what’s coming in and going out, and isolating information is a security best practice.

When you are running applications across multiple and/or hybrid clusters and using different network drivers, complexity multiplies.

That is why modern service mesh tools such as HashiCorp Consul and Istio have become increasingly popular. They focus on secure network traffic isolation and communication.

Besides service meshes, there are network drivers such as Cilium and Calico that are easy to install and integrate with Kubernetes.
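At the Kubernetes object level, traffic isolation is usually expressed with NetworkPolicy resources, which are enforced only when the cluster's network plugin supports them (Calico and Cilium both do). The following Python sketch builds a default-deny policy for a namespace plus one explicit allow rule. The namespace, app labels and port are hypothetical, and the helper names are illustrative.

```python
import json


def default_deny(namespace: str) -> dict:
    """Block all ingress and egress for every pod in the namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        # An empty podSelector matches all pods in the namespace.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress", "Egress"]},
    }


def allow_ingress(namespace: str, target_app: str, source_app: str, port: int) -> dict:
    """Allow TCP traffic to `target_app` pods from `source_app` pods only."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"allow-{source_app}-to-{target_app}", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [
                {
                    "from": [{"podSelector": {"matchLabels": {"app": source_app}}}],
                    "ports": [{"protocol": "TCP", "port": port}],
                }
            ],
        },
    }


if __name__ == "__main__":
    policies = [
        default_deny("prod"),                       # hypothetical namespace
        allow_ingress("prod", "api", "web", 8080),  # hypothetical apps and port
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": policies}, indent=2))
```

Starting from deny-all and adding narrow allow rules keeps the permitted paths explicit, which tends to make compliance reviews easier than auditing an open network after the fact.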

Audit Logging

Let’s talk about audit logging, the compliance and security superhero that tells us who did what and when. Whilst Kubernetes does provide robust logging, similar to what we have in the case of RBAC, Kubernetes logging is terminal-based, and it is not practical to access the logs that way all the time.

Ideally, what’s needed is a centralized logging system where all the logs are aggregated, and you can filter the logs as per your requirements. You should be able to set up thresholds and alerts when something is not going correctly or if you detect some abnormality.

There are many popular tools with native Kubernetes integration, such as Elastic Stack and Grafana Loki, that you can implement to simplify audit logging.
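To show the kind of filtering a centralized system performs, here is a minimal Python sketch that reads Kubernetes audit events in JSON-lines form and extracts who changed what, and when, in one namespace. It assumes the API server is already configured to write audit events with the standard `audit.k8s.io/v1` fields. The sample events in the sketch are invented for illustration only.

```python
import json
from typing import Iterable, Iterator, Tuple

WRITE_VERBS = {"create", "update", "patch", "delete", "deletecollection"}


def write_actions(lines: Iterable[str], namespace: str) -> Iterator[Tuple[str, str, str, str]]:
    """Yield (timestamp, user, verb, resource/name) for completed write requests."""
    for line in lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        # A request can be logged at several stages; keep only the final one.
        if event.get("stage") != "ResponseComplete":
            continue
        ref = event.get("objectRef") or {}
        if event.get("verb") not in WRITE_VERBS or ref.get("namespace") != namespace:
            continue
        user = event.get("user", {}).get("username", "unknown")
        target = f"{ref.get('resource', '?')}/{ref.get('name', '?')}"
        yield event.get("requestReceivedTimestamp", ""), user, event["verb"], target


if __name__ == "__main__":
    # Invented sample events, trimmed to the fields the filter reads.
    sample = [
        json.dumps({
            "stage": "ResponseComplete", "verb": "delete",
            "user": {"username": "jane"},
            "objectRef": {"resource": "deployments", "namespace": "prod", "name": "web"},
            "requestReceivedTimestamp": "2024-01-01T10:00:00Z",
        }),
        json.dumps({
            "stage": "ResponseComplete", "verb": "get",
            "user": {"username": "sam"},
            "objectRef": {"resource": "pods", "namespace": "prod", "name": "web-1"},
            "requestReceivedTimestamp": "2024-01-01T10:01:00Z",
        }),
    ]
    for when, who, verb, what in write_actions(sample, "prod"):
        print(when, who, verb, what)
```

A log platform does the same thing at scale: it parses these fields once on ingestion, so that filters and alert thresholds can be defined on user, verb and resource rather than on raw text.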

Progressive Delivery Strategies

Progressive delivery is the final area, and one in which I’ve personally faced a lot of challenges. There are multiple types of Kubernetes deployments such as Standard, Blue-Green, Recreate/Highlander, Rolling/Ramped, A/B and Canary. A/B and Canary deployments are especially popular where teams have a high velocity of deployments and do not want to disrupt production traffic.
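Of these, a Kubernetes Deployment object implements only two natively through its `spec.strategy` field: `RollingUpdate` (the default) and `Recreate`. The others have to be assembled from several objects. As a minimal Python sketch, the field can be built as shown here. The helper name `deployment_strategy` is hypothetical, and the default values in the sketch are illustrative choices rather than the Kubernetes defaults.

```python
from typing import Union

IntOrPercent = Union[int, str]  # an absolute pod count such as 1, or a string such as "25%"


def deployment_strategy(
    kind: str,
    max_surge: IntOrPercent = 1,
    max_unavailable: IntOrPercent = 0,
) -> dict:
    """Return a value for a Deployment's spec.strategy field."""
    if kind == "Recreate":
        # All old pods are terminated before any new pod starts.
        return {"type": "Recreate"}
    if kind == "RollingUpdate":
        # Old pods are replaced gradually, within the surge/unavailable limits.
        return {
            "type": "RollingUpdate",
            "rollingUpdate": {"maxSurge": max_surge, "maxUnavailable": max_unavailable},
        }
    raise ValueError(f"Deployments only support Recreate and RollingUpdate, got {kind!r}")


if __name__ == "__main__":
    print(deployment_strategy("RollingUpdate", max_surge="25%", max_unavailable=0))
    print(deployment_strategy("Recreate"))
```

With `max_unavailable` set to 0 and `max_surge` set to 1, a rollout adds one new pod at a time and removes an old one only after the new pod is ready, trading rollout speed for capacity.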

This area is challenging because firstly it is an area in which Kubernetes provides the ingredients but not the recipes. Secondly, you need a good understanding of what deployment strategy fits your specific use case. Thirdly, you need to consider the variables that exist outside your deployment.

The ingredients are there, not the recipes

In Kubernetes, objects are building blocks used to define the structure, configuration, and behavior of the system. Each object represents a specific resource or component within the cluster. With Kubernetes objects, you can achieve most deployment strategies unless there is an exceptional requirement.

However, there is no roadmap or template with the specific steps needed for the deployment strategy. You need the expertise or the time to learn, try and test.
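To illustrate what assembling such a recipe by hand can look like, here is a minimal Python sketch of one well-known canary pattern: two Deployments that share an `app` label but differ in a `track` label, behind a single Service that selects on `app` only. Traffic is then spread roughly in proportion to the number of pods on each track. The app name, image tags and the `split_replicas` helper are assumptions for illustration, not a prescribed method.

```python
import json
from typing import Tuple


def split_replicas(total: int, canary_percent: int) -> Tuple[int, int]:
    """Return (stable, canary) replica counts for an approximate traffic split."""
    if total < 2 or not 0 < canary_percent < 100:
        raise ValueError("need at least 2 replicas and a percentage between 1 and 99")
    canary = max(1, round(total * canary_percent / 100))
    canary = min(canary, total - 1)  # always keep at least one stable pod
    return total - canary, canary


def deployment(app: str, track: str, image: str, replicas: int) -> dict:
    """Deployment for one track ("stable" or "canary") of the app."""
    labels = {"app": app, "track": track}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": f"{app}-{track}", "labels": labels},
        "spec": {
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {"containers": [{"name": app, "image": image}]},
            },
        },
    }


def service(app: str, port: int) -> dict:
    """Service selecting on "app" only, so pods from both tracks receive traffic."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": app},
        "spec": {"selector": {"app": app}, "ports": [{"port": port, "targetPort": port}]},
    }


if __name__ == "__main__":
    stable_n, canary_n = split_replicas(total=10, canary_percent=10)
    items = [
        deployment("web", "stable", "example/web:1.0", stable_n),  # hypothetical images
        deployment("web", "canary", "example/web:1.1", canary_n),
        service("web", 80),
    ]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```

Even this simple version leaves the promotion, rollback and monitoring steps to you, and the split is only as fine-grained as the replica counts allow. Exact percentages or header-based A/B routing generally need an ingress controller or service mesh on top.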

What is the right deployment strategy for my use case?

The deployment strategy you choose is also dependent on your specific use case. It is (highly) advisable to spend some time brainstorming and testing the right deployment strategy for your use case. For example, if your requirement is to test features against production traffic and route traffic based on defined results, you might go with an A/B or Canary deployment strategy.

Time invested upfront to map your use case to the appropriate deployment strategy or strategies will smooth the work during the implementation phase. I can’t emphasize enough that understanding the variables and having a clear task list upfront will save a lot of heartache.

Variables beyond your deployment

When you factor in variables outside your deployment, the complexity increases. For example, if your Kubernetes cluster is hosted in the cloud and uses the provider’s managed services, such as load balancers, you need to factor this into your implementation plan.

Progressive Delivery Strategies with CAEPE

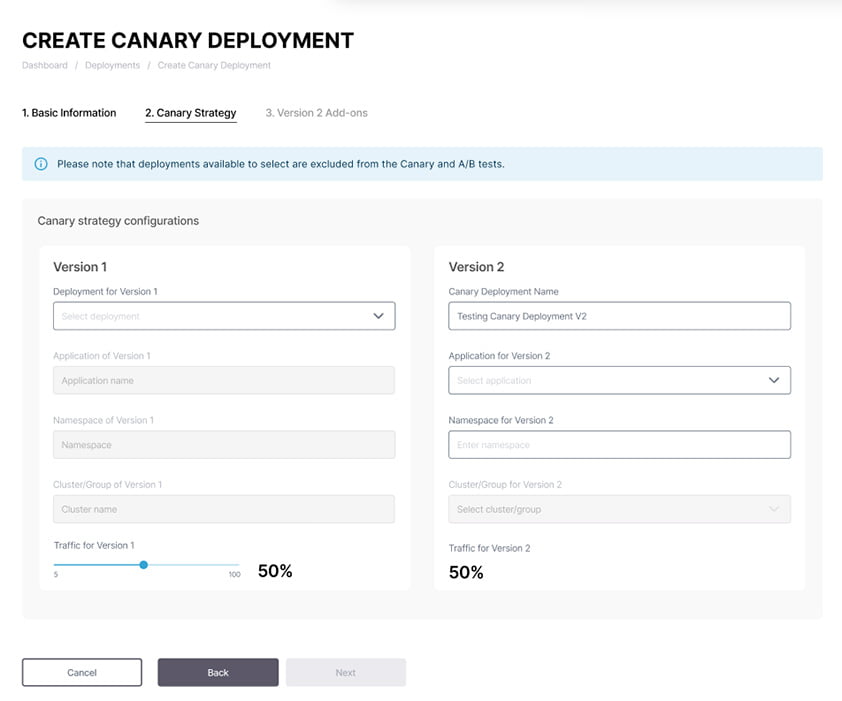

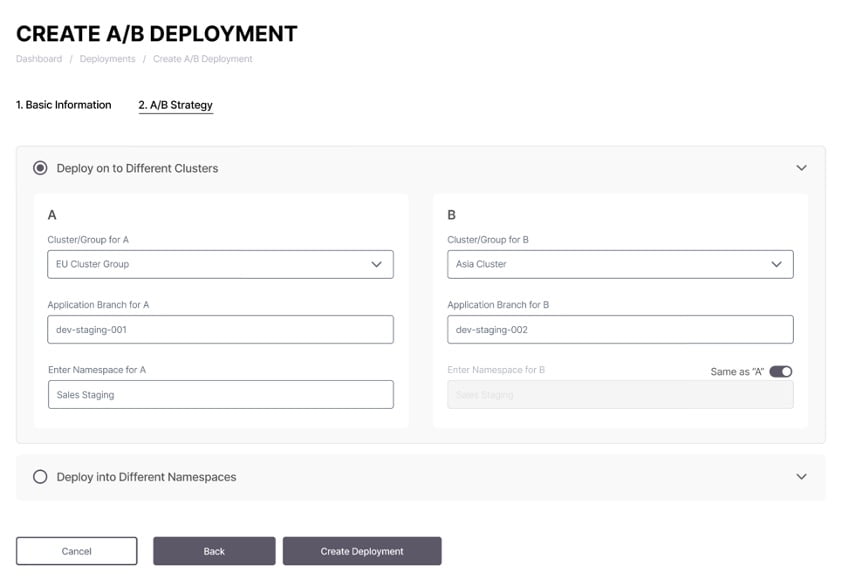

As mentioned, this is an area in which I’ve personally faced many challenges (despite being an advanced Kubernetes user). That’s why I’m excited about the CAEPE™ features we have developed to support deployment strategies.

One of our main goals with CAEPE is to make progressive delivery strategies accessible to every development team member.

CAEPE supports all major strategies, including Standard, Blue/Green, Recreate/Highlander, Rolling/Ramped, A/B and Canary deployments.

For each deployment strategy, CAEPE offers guided, UI-based workflows that allow users to deploy, control routing, and tag new versions efficiently.

With CAEPE’s user-friendly interface, you can specify the deployment strategy you want to use and be guided through a wizard-like experience to ensure the correct execution of your applications and services.

The snapshots above show the workflow for deploying Canary and A/B strategies.

Conclusions

I started this article by saying that secure and consistent application deployment on Kubernetes is like herding caffeinated kittens on a trampoline. As you can see, there are multiple things to consider. Kubernetes is still very much an evolving technology, with a lot going on under the hood and few simple ways to get things done.

Major areas to watch out for include Role-Based Access Controls (RBAC), node isolation, network traffic management, audit logging, and progressive delivery strategies.

- Native Kubernetes lacks a user interface for RBAC management, necessitating integration of third-party tools for efficient control.

- Node isolation requires careful consideration of application-specific requirements and security compliance.

- Network traffic management is complex, but service mesh tools and drivers can assist in achieving secure traffic isolation.

- Audit logging is crucial for compliance, and integrating tools simplifies centralized log aggregation and analysis.

- Finally, implementing progressive delivery strategies, such as A/B and Canary deployments, requires understanding Kubernetes objects and testing them for specific use cases.

Conquering these five areas will go a long way to ensuring peace of mind when it comes to your Kubernetes application deployments.

CAEPE Continuous Deployment

Manage workloads on Kubernetes anywhere robustly and securely.

- Shores up security by simplifying deployment anywhere, supporting managed services, native Kubernetes, self-hosted, edge and secure airgapped deployment targets.

- Supports GitOps and provides guided, UI-driven workflows for all major progressive delivery strategies.

- Has RBAC built-in, providing inherent enterprise access control for who can deploy.

- Supports extended testing capabilities enabling your team to run different tests quickly and easily.